Machine learning and other gibberish

See also: https://sharing.leima.is

Archives: https://datumorphism.leima.is/amneumarkt/

See also: https://sharing.leima.is

Archives: https://datumorphism.leima.is/amneumarkt/

#ML

Simple algorithm, powerful results

https://avinayak.github.io/algorithms/programming/2021/02/19/finding-mona-lisa-in-the-game-of-life.html

Simple algorithm, powerful results

https://avinayak.github.io/algorithms/programming/2021/02/19/finding-mona-lisa-in-the-game-of-life.html

#ML

I just found an elegant decision tree visualization package for sklearn.

I have been trying to explain decision tree results to many business people. It is very hard. This package makes it much easier to explain the results to a non-techinical person.

https://github.com/parrt/dtreeviz

I just found an elegant decision tree visualization package for sklearn.

I have been trying to explain decision tree results to many business people. It is very hard. This package makes it much easier to explain the results to a non-techinical person.

https://github.com/parrt/dtreeviz

#fun

> Growth in data science interviews plateaued in 2020. Data science interviews only grew by 10% after previously growing by 80% year over year.

> Data engineering specific interviews increased by 40% in the past year.

https://www.interviewquery.com/blog-data-science-interview-report

> Growth in data science interviews plateaued in 2020. Data science interviews only grew by 10% after previously growing by 80% year over year.

> Data engineering specific interviews increased by 40% in the past year.

https://www.interviewquery.com/blog-data-science-interview-report

#ML #Phyiscs

The easiest method to apply constraints to a dynamical system is through Lagrange multiplier, aka, penalties in statistical learning. Penalties don't guarantee any conservation laws as they are simply penalties, unless you find the multiplers carrying some physical meaning like what we have in Boltzmann statistics.

This paper explains a simple method to hardcode conservation laws in a Neural Network architecture.

Paper:

https://journals.aps.org/prl/abstract/10.1103/PhysRevLett.126.098302

TLDR:

See the attached figure. Basically, the hardcoded conservation is realized using additional layers after the normal neural network predictions.

A quick bite of the paper: https://physics.aps.org/articles/v14/s25

Some thoughts:

I like this paper. When physicists work on problems, they like dimensionlessness. This paper follows this convention. This is extremely important when you are working on a numerical problem. One should always make it dimensionless before implementing the equations in code.

The easiest method to apply constraints to a dynamical system is through Lagrange multiplier, aka, penalties in statistical learning. Penalties don't guarantee any conservation laws as they are simply penalties, unless you find the multiplers carrying some physical meaning like what we have in Boltzmann statistics.

This paper explains a simple method to hardcode conservation laws in a Neural Network architecture.

Paper:

https://journals.aps.org/prl/abstract/10.1103/PhysRevLett.126.098302

TLDR:

See the attached figure. Basically, the hardcoded conservation is realized using additional layers after the normal neural network predictions.

A quick bite of the paper: https://physics.aps.org/articles/v14/s25

Some thoughts:

I like this paper. When physicists work on problems, they like dimensionlessness. This paper follows this convention. This is extremely important when you are working on a numerical problem. One should always make it dimensionless before implementing the equations in code.

#event

If you are interested in free online AI Cons, Bosch CAI is organizing the AI Con 2021.

This event starts tomorrow.

https://www.ubivent.com/start/AI-CON-2021

If you are interested in free online AI Cons, Bosch CAI is organizing the AI Con 2021.

This event starts tomorrow.

https://www.ubivent.com/start/AI-CON-2021

#ML

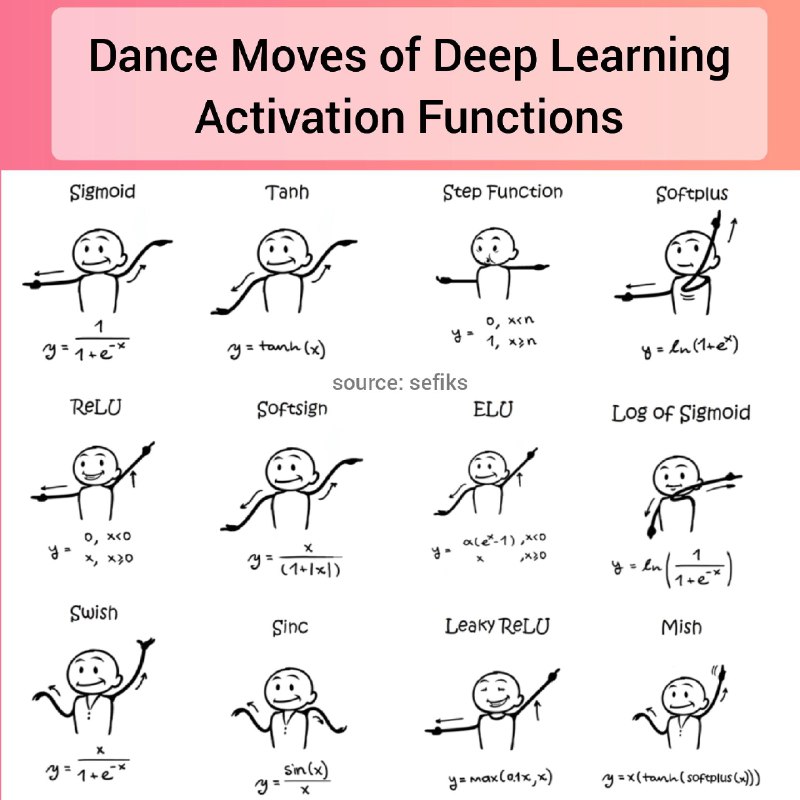

Haha

Deep Learning Activation Functions using Dance Moves

https://www.reddit.com/r/learnmachinelearning/comments/lvehmi/deep_learning_activation_functions_using_dance/?utm_medium=android_app&utm_source=share

Haha

Deep Learning Activation Functions using Dance Moves

https://www.reddit.com/r/learnmachinelearning/comments/lvehmi/deep_learning_activation_functions_using_dance/?utm_medium=android_app&utm_source=share

#DataScience

Ah I have always been thinking about writing a book like this.

Just bought the book to educate myself on communications.

https://andrewnc.github.io/blog/everyday_data_science.html

Ah I have always been thinking about writing a book like this.

Just bought the book to educate myself on communications.

https://andrewnc.github.io/blog/everyday_data_science.html

#ML

note2self:

From ref 1

> we can take any expected utility maximization problem, and decompose it into an entropy minimization term plus a “make-the-world-look-like-this-specific-model” term.

This view should be combined with ref 2. If the utility is related to the curvature of the discrete state space, we are making a connection between entropy + KL divergence and curvature on graph. (This idea has to be polished in depth.)

Refs:

1. Trivial proof but interesting perspective: https://www.lesswrong.com/posts/voLHQgNncnjjgAPH7/utility-maximization-description-length-minimization

2. Samal Areejit, Pharasi Hirdesh K., Ramaia Sarath Jyotsna, Kannan Harish, Saucan Emil, Jost Jürgen and Chakraborti Anirban 2021Network geometry and market instabilityR. Soc. open sci.8201734. http://doi.org/10.1098/rsos.201734

note2self:

From ref 1

> we can take any expected utility maximization problem, and decompose it into an entropy minimization term plus a “make-the-world-look-like-this-specific-model” term.

This view should be combined with ref 2. If the utility is related to the curvature of the discrete state space, we are making a connection between entropy + KL divergence and curvature on graph. (This idea has to be polished in depth.)

Refs:

1. Trivial proof but interesting perspective: https://www.lesswrong.com/posts/voLHQgNncnjjgAPH7/utility-maximization-description-length-minimization

2. Samal Areejit, Pharasi Hirdesh K., Ramaia Sarath Jyotsna, Kannan Harish, Saucan Emil, Jost Jürgen and Chakraborti Anirban 2021Network geometry and market instabilityR. Soc. open sci.8201734. http://doi.org/10.1098/rsos.201734

You can even use Chinese in GitHub Codespaces. 😱

Well this is trivial if you have Chinese input methods on your computer. What if you are using a company computer and you would like to add some Chinese comments just for fun....

#fun

Interesting talk on the softwares used by Apollo.

https://media.ccc.de/v/34c3-9064-the_ultimate_apollo_guidance_computer_talk#t=3305

Interesting talk on the softwares used by Apollo.

https://media.ccc.de/v/34c3-9064-the_ultimate_apollo_guidance_computer_talk#t=3305

#ML

The new AI spring: a deflationary view

It's actually fun to watch philosophers fighting each other.

The author is trying to deflate the inflated expectations on AI by looking into why inflated expectations are harming our society. It's not exactly based on evidence but still quite interesting to read.

| SpringerLink

https://link.springer.com/article/10.1007/s00146-019-00912-z

The new AI spring: a deflationary view

It's actually fun to watch philosophers fighting each other.

The author is trying to deflate the inflated expectations on AI by looking into why inflated expectations are harming our society. It's not exactly based on evidence but still quite interesting to read.

| SpringerLink

https://link.springer.com/article/10.1007/s00146-019-00912-z

#neuroscience

Definitely weird. The authors used DNN to capture the firing behaviors of cortical neurons.

- A single hidden layer DNN (can you even call it Deep NN in this case?) can capture the neuronal activity without NMDA but with AMPA.

- With NMDA, the neuron requires more than 1 layer.

This paper *stops* here.

WTH this is?

Let's go back to the foundations of statistical learning. What the author is looking for is a separation of "stimulation" space. The "stimulation" space is basically a very simple time series (Poissonic) space. We just need to map inputs back to the same space but with different feature values. Since the feature space is so small, we will absolutely fit everything if we increase the expressing power of the DNN.

The thing is, we already know that NMDA-based synapses require more expressing power and we have very interpretable and good mathematical models for this... This research provides neither better predictability nor interpretability. Well done...

Maybe you have different opinions, prove me wrong.

https://www.biorxiv.org/content/10.1101/613141v2

Definitely weird. The authors used DNN to capture the firing behaviors of cortical neurons.

- A single hidden layer DNN (can you even call it Deep NN in this case?) can capture the neuronal activity without NMDA but with AMPA.

- With NMDA, the neuron requires more than 1 layer.

This paper *stops* here.

WTH this is?

Let's go back to the foundations of statistical learning. What the author is looking for is a separation of "stimulation" space. The "stimulation" space is basically a very simple time series (Poissonic) space. We just need to map inputs back to the same space but with different feature values. Since the feature space is so small, we will absolutely fit everything if we increase the expressing power of the DNN.

The thing is, we already know that NMDA-based synapses require more expressing power and we have very interpretable and good mathematical models for this... This research provides neither better predictability nor interpretability. Well done...

Maybe you have different opinions, prove me wrong.

https://www.biorxiv.org/content/10.1101/613141v2

#fun

We have been testing a new connected online work space using discord. Whoever is bored by home office can connect to a shared channel and chat.

Discord allows team voice chat and multiple screensharing. By adding bots to the channel, the team can share music playlists. Discord allows detailed adjustment of the voices so anyone could adjust volumes of any other users or even deafen himself/herself. So it is possible to be connected for the whole day.

It seems that jump in and chat at anytime and share working screen make it fun for WFH.

We have been testing a new connected online work space using discord. Whoever is bored by home office can connect to a shared channel and chat.

Discord allows team voice chat and multiple screensharing. By adding bots to the channel, the team can share music playlists. Discord allows detailed adjustment of the voices so anyone could adjust volumes of any other users or even deafen himself/herself. So it is possible to be connected for the whole day.

It seems that jump in and chat at anytime and share working screen make it fun for WFH.

#TIL

My cheerful price for the work I am currently doing is very high...

https://www.lesswrong.com/posts/MzKKi7niyEqkBPnyu/your-cheerful-price

My cheerful price for the work I am currently doing is very high...

https://www.lesswrong.com/posts/MzKKi7niyEqkBPnyu/your-cheerful-price

#productivity

I find vscode remote-ssh very helpful. For some projects with frequent maintenance fixes, I prepared all the required environment on a remote server. I only need to click on the remote-ssh connection to connect to this remote server and immediately start my work.

This low overhead setup makes me less reluctant to fix stuff.

It is also possible to connect to Docker containers. By setting up different containers we can work in completely different environments with a few clicks.

This is crazy.

I find vscode remote-ssh very helpful. For some projects with frequent maintenance fixes, I prepared all the required environment on a remote server. I only need to click on the remote-ssh connection to connect to this remote server and immediately start my work.

This low overhead setup makes me less reluctant to fix stuff.

It is also possible to connect to Docker containers. By setting up different containers we can work in completely different environments with a few clicks.

This is crazy.