Machine learning and other gibberish

See also: https://sharing.leima.is

Archives: https://datumorphism.leima.is/amneumarkt/

See also: https://sharing.leima.is

Archives: https://datumorphism.leima.is/amneumarkt/

#data

In physics, people claim that more is different. In the data world, more is very different. I'm no expert in big data, but I learned the scaling problem only when I started working for corporates.

I like the following from the author.

> data sizes increase much faster than compute sizes.

In deep learning, many models are following a scaling law of performance and dataset size. Indeed, more data brings in better performance. But the increase in performance becomes really slow. Business doesn't need a perfect model. We also know computation costs money. At some point, we simply have to cut the dataset, even if we have all the data in the world.

So ..., data hoarding is probably fine, but our models might not need that much.

https://motherduck.com/blog/big-data-is-dead/

In physics, people claim that more is different. In the data world, more is very different. I'm no expert in big data, but I learned the scaling problem only when I started working for corporates.

I like the following from the author.

> data sizes increase much faster than compute sizes.

In deep learning, many models are following a scaling law of performance and dataset size. Indeed, more data brings in better performance. But the increase in performance becomes really slow. Business doesn't need a perfect model. We also know computation costs money. At some point, we simply have to cut the dataset, even if we have all the data in the world.

So ..., data hoarding is probably fine, but our models might not need that much.

https://motherduck.com/blog/big-data-is-dead/

#ml

google-research/tuning_playbook: A playbook for systematically maximizing the performance of deep learning models.

https://github.com/google-research/tuning_playbook

google-research/tuning_playbook: A playbook for systematically maximizing the performance of deep learning models.

https://github.com/google-research/tuning_playbook

#ml

Haha icecube

IceCube - Neutrinos in Deep Ice | Kaggle

https://www.kaggle.com/competitions/icecube-neutrinos-in-deep-ice?utm_medium=email&utm_source=gamma&utm_campaign=comp-icecube-2023

Haha icecube

IceCube - Neutrinos in Deep Ice | Kaggle

https://www.kaggle.com/competitions/icecube-neutrinos-in-deep-ice?utm_medium=email&utm_source=gamma&utm_campaign=comp-icecube-2023

#data

Just got my ticket.

I have been reviewing proposals for PyData this year. I saw some really cool proposals so I finally decided to attend the conference.

https://2023.pycon.de/blog/pyconde-pydata-berlin-tickets/

Just got my ticket.

I have been reviewing proposals for PyData this year. I saw some really cool proposals so I finally decided to attend the conference.

https://2023.pycon.de/blog/pyconde-pydata-berlin-tickets/

#misc

Lastpass was hacked and the hacker obtained already.

https://blog.lastpass.com/2022/12/notice-of-recent-security-incident/

Lastpass was hacked and the hacker obtained already.

https://blog.lastpass.com/2022/12/notice-of-recent-security-incident/

#ml

GPT writing papers... Both fancy and scary.

https://huggingface.co/stanford-crfm/pubmedgpt?text=Neuroplasticity

GPT writing papers... Both fancy and scary.

https://huggingface.co/stanford-crfm/pubmedgpt?text=Neuroplasticity

#data

https://evidence.dev/

I like the idea. My last dashboarding tool for work was streamlit. Streamlit is lightweight and fast. But it requires Python code and a Python server.

Evidence is mostly markdown and SQL. For many lightweight dashboarding tasks, this is just sweet.

Evidence is built on node. I could run a server and provide live updates but I can already build a static website by running

Played with it a bit. Nothing to complain about at this point.

https://evidence.dev/

I like the idea. My last dashboarding tool for work was streamlit. Streamlit is lightweight and fast. But it requires Python code and a Python server.

Evidence is mostly markdown and SQL. For many lightweight dashboarding tasks, this is just sweet.

Evidence is built on node. I could run a server and provide live updates but I can already build a static website by running

npm run build.Played with it a bit. Nothing to complain about at this point.

#visualization

Visualizations of energy consumption and prices in Germany. Given the low temperature atm, it maybe interesting to watch them evolve.

https://www.zeit.de/wirtschaft/energiemonitor-deutschland-gaspreis-spritpreis-energieversorgung

Visualizations of energy consumption and prices in Germany. Given the low temperature atm, it maybe interesting to watch them evolve.

https://www.zeit.de/wirtschaft/energiemonitor-deutschland-gaspreis-spritpreis-energieversorgung

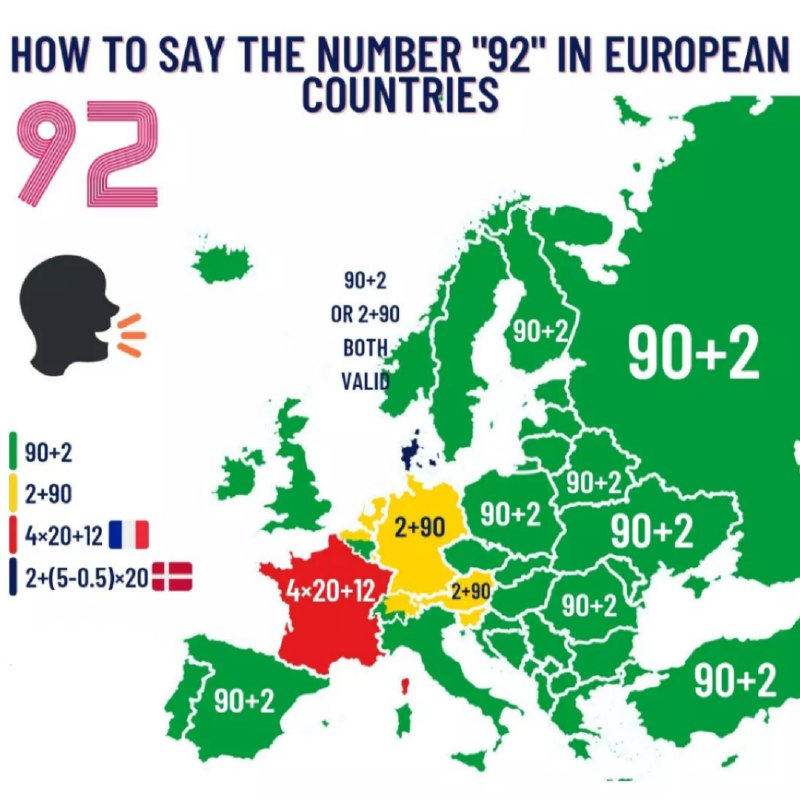

#fun

Denmark...

I thought French was complicated, now we all know Danish leads the race.

https://www.reddit.com/r/europe/comments/zo258s/how_to_say_number_92_in_european_countries/

Denmark...

I thought French was complicated, now we all know Danish leads the race.

https://www.reddit.com/r/europe/comments/zo258s/how_to_say_number_92_in_european_countries/

In his MinT paper, Hyndman said he confused these two quantities in his previous paper. 😂

MinT is a simple method to make forecasts with hierarchical structure coherent. Here coherent means the sum of the lower level forecasts equals the higher level forecasts.

For example, our time series has a strucutre like sales of coca cola + sales of spirit = sales of beverages. If this relations holds for our forecasts, we have coherent forecasts.

This may sound trivial, the problem is in fact hard. There are many trivial methods such as only forecasting lower levels (coca cola, spirit) then use the sum as the higher level (sales of beverages). These are usually too naive to be effective.

MinT is a reconciliation method that combines high level forecasts and the lower level forecasts to find an optimal combination/reconciliation.

https://robjhyndman.com/papers/MinT.pdf

#ml

You spent 10k euros on GPU then realized the statistical baseline model is better. 🤣

https://github.com/Nixtla/statsforecast/tree/main/experiments/m3

You spent 10k euros on GPU then realized the statistical baseline model is better. 🤣

https://github.com/Nixtla/statsforecast/tree/main/experiments/m3