Machine learning and other gibberish

See also: https://sharing.leima.is

Archives: https://datumorphism.leima.is/amneumarkt/

I ran into this hilarious comment on pie charts in a book called The Grammar of Graphics.

“To prevent bias, give the child the knife and someone else the first choice of slices.” 😱😱😱

#tools #writing

https://www.losethevery.com/

> "Very good english" is not very good english. Lose the very.

#datascience #career #academia

> I regret quitting astrophysics

https://news.ycombinator.com/item?id=25444069

http://www.marcelhaas.com/index.php/2020/12/16/i-regret-quitting-astrophysics/

Me too 😂 Though I'm not an astrophysicist, I miss academia too.

#datascience #audiolization

This is the audiolization of daily new cases for FR, IT, ES, DE, and PL between 2020-08-01 and 2020-12-14. I made an audiolization video two years ago. As I am currently in quarantine and the days are getting boring, I started thinking about mapping data points to different representations. We usually talk about visualization because it offers so many elements for representing complicated data. Audiolization, on the other hand, leaves us with very few elements to encode. But working with audio is a lot of fun, so I wrote a Python package that maps a pandas DataFrame or NumPy ndarray to a MIDI representation. Here is the package: https://github.com/emptymalei/audiorepr
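A minimal sketch of the mapping idea, assuming simple min-max scaling of a series onto MIDI note numbers. This is not the audiorepr API; the function name `values_to_midi_pitches`, the pitch range 48 to 84, and the sample numbers are all made up for illustration.

```python
import numpy as np


def values_to_midi_pitches(values, low=48, high=84):
    """Min-max scale a 1-D series onto integer MIDI note numbers in [low, high].

    Hypothetical helper for illustration only; not part of audiorepr.
    """
    arr = np.asarray(values, dtype=float)
    span = arr.max() - arr.min()
    if span == 0:
        # A constant series carries no pitch information,
        # so fall back to the middle of the pitch range.
        return np.full(arr.shape, (low + high) // 2, dtype=int)
    scaled = (arr - arr.min()) / span  # now in [0, 1]
    return np.rint(low + scaled * (high - low)).astype(int)


# Made-up daily counts, not real case data.
daily_cases = [120, 450, 900, 3000, 1500]
pitches = values_to_midi_pitches(daily_cases)
print(pitches)
```

Pitch is only one of the few elements available to encode. Note duration and velocity could be mapped from other columns in the same way.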

#machinelearning

https://arxiv.org/abs/2007.04504

Learning Differential Equations that are Easy to Solve

Jacob Kelly, Jesse Bettencourt, Matthew James Johnson, David Duvenaud

Differential equations parameterized by neural networks become expensive to solve numerically as training progresses. We propose a remedy that encourages learned dynamics to be easier to solve. Specifically, we introduce a differentiable surrogate for the time cost of standard numerical solvers, using higher-order derivatives of solution trajectories. These derivatives are efficient to compute with Taylor-mode automatic differentiation. Optimizing this additional objective trades model performance against the time cost of solving the learned dynamics. We demonstrate our approach by training substantially faster, while nearly as accurate, models in supervised classification, density estimation, and time-series modelling tasks.
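For intuition, a penalty of the kind the abstract describes can be written as the integrated squared norm of a higher-order time derivative of the solution trajectory, added to the task loss. The symbols $z$, $f_\theta$, $K$, and $\lambda$ and the exact form below are assumptions for illustration; check the paper for the precise definition.

```latex
\frac{dz}{dt} = f_\theta\bigl(z(t), t\bigr), \qquad
\mathcal{R}_K(\theta) = \int_{t_0}^{t_1}
  \left\lVert \frac{d^K z(t)}{dt^K} \right\rVert_2^2 \, dt, \qquad
\mathcal{L}(\theta) = \mathcal{L}_{\text{task}}(\theta) + \lambda \, \mathcal{R}_K(\theta)
```

A larger $\lambda$ pushes the learned dynamics toward trajectories that are cheaper to solve, at some cost in task performance, which is the trade-off the abstract mentions.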

#science

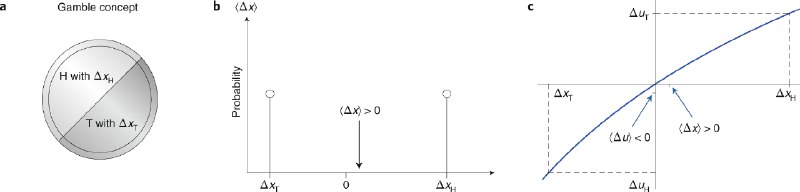

The ergodicity problem in economics | Nature Physics

https://www.nature.com/articles/s41567-019-0732-0

I read another paper about the hot hand/gambler's fallacy a while ago, and the authors of that paper took a similar view. Here is the article:

Surprised by the Hot Hand Fallacy? A Truth in the Law of Small Numbers, by Miller and Sanjurjo

https://arxiv.org/abs/2012.04863

Skillearn: Machine Learning Inspired by Humans' Learning Skills

Interesting idea. I didn't know interleaving is already being used in ML.

https://events.ccc.de/2020/09/04/rc3-remote-chaos-experience/

CCC is hosting its 2020 event fully online. Everyone can join with a pay-as-you-wish ticket. Join if you like programming, hacking, social events, and learning something crazy and new. 👍👍👍

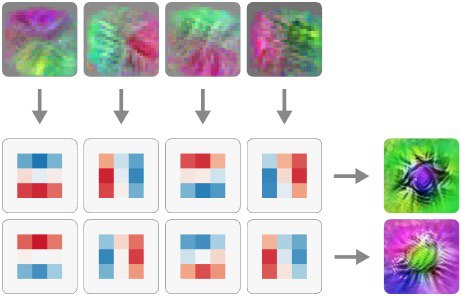

Naturally Occurring Equivariance in Neural Networks

https://distill.pub/2020/circuits/equivariance

#ML #paper

https://arxiv.org/abs/2012.00152

Every Model Learned by Gradient Descent Is Approximately a Kernel Machine

Deep learning's successes are often attributed to its ability to automatically discover new representations of the data, rather than relying on handcrafted features like other learning methods.

A new search engine by a former Salesforce chief scientist who helped develop the company's AI platform, Einstein.

The new search engine is called "you".

https://you.com/?refCode=5ac0f0ea
