Machine learning and other gibberish

See also: https://sharing.leima.is

Archives: https://datumorphism.leima.is/amneumarkt/

#ml

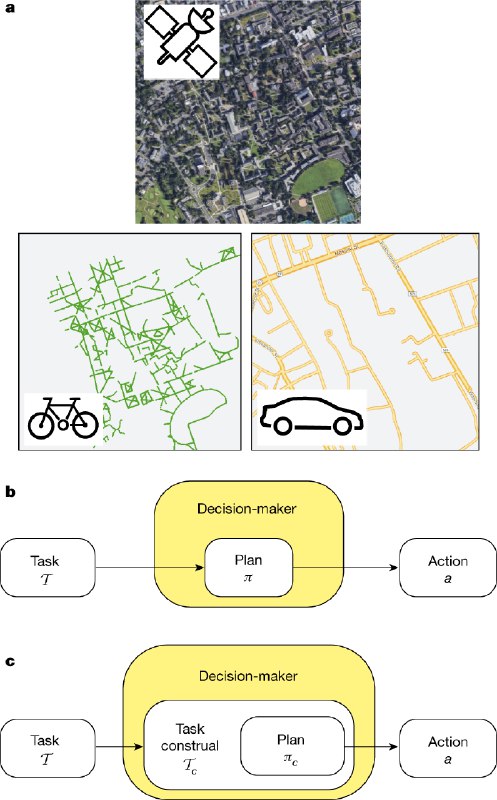

Parsimony with cognitive resource limitations 🤔

https://www.nature.com/articles/s41586-022-04743-9

#github

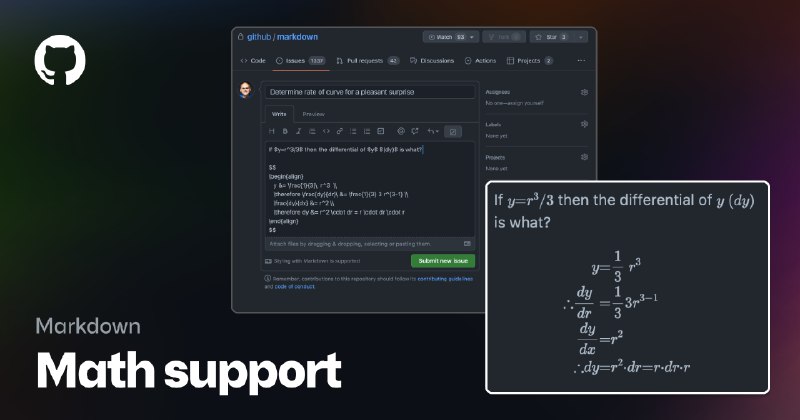

I have been following an issue on math support for GitHub Markdown (github/markup/issues/274).

One thousand years later ...

Math support in Markdown | The GitHub Blog

https://github.blog/2022-05-19-math-support-in-markdown/

#misc

Quote from this article:

"It doesn’t transmit from person to person as readily, and because it is related to the smallpox virus, there are already treatments and vaccines on hand for curbing its spread. So while scientists are concerned, because any new viral behaviour is worrying — they are not panicked."

https://www.nature.com/articles/d41586-022-01421-8

#ml

Finally... We can now utilize the real power of M1 chips.

Introducing Accelerated PyTorch Training on Mac | PyTorch

https://pytorch.org/blog/introducing-accelerated-pytorch-training-on-mac/

I have been following this issue: https://github.com/pytorch/pytorch/issues/47702#issuecomment-1130162835

There were even some fights. 😂
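
For reference, a minimal sketch of how the new backend can be selected. It assumes PyTorch 1.12 or later, where the `mps` device and `torch.backends.mps.is_available()` were introduced; the helper name `pick_device` is made up for illustration, and the guarded import is only there so the snippet also runs where PyTorch is not installed.

```python
# Guarded import so the sketch also runs where PyTorch is not installed.
try:
    import torch
except ImportError:
    torch = None


def pick_device() -> str:
    """Return "mps" on Apple silicon when available, else "cuda", else "cpu"."""
    if torch is None:
        return "cpu"
    mps = getattr(torch.backends, "mps", None)  # absent before PyTorch 1.12
    if mps is not None and mps.is_available():
        return "mps"
    if torch.cuda.is_available():
        return "cuda"
    return "cpu"


device = pick_device()
print(f"Using device: {device}")

if torch is not None:
    # Tensors and modules are moved to the chosen device the usual way.
    x = torch.ones(3, device=device)
    print(x * 2)
```

Falling back to `cuda` and then `cpu` keeps the same script usable on non-Mac machines.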

#python

This post is a retro on how I learned Python.

Disclaimer: I cannot claim that I am a master of Python. This post is a retrospective of how I learned Python in different stages.

I started using Python back in 2012. Before this, I was mostly a Matlab/C user.

Python is easy to get started with, yet hard to master. People coming from other languages can easily make things work, but they tend to write some "disgusting" Python code. That is because Python people talk about being "pythonic" all the time, and "pythonic" is a philosophy of style rather than an actual style guide.

When we get started, we are most likely not interested in [PEP8](https://peps.python.org/pep-0008/) and [PEP257](https://peps.python.org/pep-0257/). Instead, we focus on making things work. After some lectures at university (or whatever source), we start to get some sense of style. Following these lectures, we probably write code and use Python in some projects. Then we begin to realize that Python is strange and sometimes doesn't even make sense, so we start learning about the philosophy behind it. At some point, we get some peer reviews and probably fight each other over the philosophies we have accumulated throughout the years.

The attached drawing (in comments) somehow captures this path that I went through. It is not a monotonic path of any sort. This path is most likely to be permutation invariant and cyclic. But the bottom line is that mastering Python requires a lot of struggle, fights, and relearning. And one of the most effective methods is peer review, just as in any other learning task in our life.

Peer review makes us think, and it is very important to find some good reviewers. Don't just stay in a silo and admire our own code. To me, the whole journey helped me build one of the most important philosophies of my life: embrace open source and collaborate.

#fun

Could use this

How to Lie with Statistics - Wikipedia

https://en.wikipedia.org/wiki/How_to_Lie_with_Statistics

#data

Stop squandering data: make units of measurement machine-readable

https://www.nature.com/articles/d41586-022-01233-w

#ml

https://ts.gluon.ai/

Highly recommended! If you are working on deep learning for forecasting, gluonts is a great package.

It simplifies all the tedious data preprocessing, slicing, and backtesting stuff. We can then spend time on implementing the models themselves (there are a lot of ready-to-use models).

What's even better, we can use PyTorch Lightning!

See this repository for a list of transformer-based forecasting models.

https://github.com/kashif/pytorch-transformer-ts

#ml

Came across this post this morning. I realized the reason I am not writing a lot in Julia is simply because I don't know how to write quality code in Julia.

When we build a model in Python, we know all the details that make it quality code. For a new language, I'm just terrified by the number of details I need to be aware of.

Ah I'm getting older.

JAX vs Julia (vs PyTorch) · Patrick Kidger

https://kidger.site/thoughts/jax-vs-julia/

#python

Anaconda open sourced this...

I have no idea what this is for...

https://github.com/pyscript/pyscript

#ml

I have heard about the information bottleneck so many times but never really went back and read the original papers.

I spent some time on it and found it quite interesting. It is philosophically based on what was described in Vapnik's The Nature of Statistical Learning, where he discussed how generalization works by enforcing parsimony.

Here in this information bottleneck paper, the most interesting thing is the quantified generalization gap and complexity gap. With these, we know where to go on the information plane.

It's a good read.

Tishby N, Zaslavsky N. Deep Learning and the Information Bottleneck Principle. arXiv [cs.LG]. 2015. Available: http://arxiv.org/abs/1503.02406
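
For context, the trade-off behind the information plane can be written as the standard information bottleneck objective. This is a sketch in my own notation, assuming $\hat{X}$ is the compressed representation of the input $X$, $Y$ is the target, and $\beta > 0$ sets the trade-off; the symbols may differ slightly from the paper.

```latex
\min_{p(\hat{x} \mid x)} \; \mathcal{L}\big[p(\hat{x} \mid x)\big]
  = I(X; \hat{X}) - \beta \, I(\hat{X}; Y)
```

The two mutual information terms are the two axes of the information plane: $I(X; \hat{X})$ measures how much of the input is kept (complexity), and $I(\hat{X}; Y)$ measures how much relevant information about the target survives.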

#work

I realized something interesting about time management.

If I open my calendar now, I see these “tiles” of meetings filling up most of my working hours. It looks bad, but it was even worse in the past. The thing is, if I do meetings during my working hours, I will have to work extra hours to do some thinking and analysis. It is rather cruel.

So what changed? I think I realized the power of Google Docs. Instead of many people talking and nobody listening, someone should write up a draft first and send it out to colleagues. Then, once people get the link to the doc, everyone can add comments.

This doesn’t seem to be very different from meetings. Oh, it is very different. The workflow can be async. We are not forced to use our precious focus time to attend meetings. We can read and comment on the document whenever we like: when we are commuting, when we are taking a dump, when we are on a phone/tablet, just, any, time.

Apart from the async workflow, I also like the "think, comment and forget" idea. I feel people deliver better ideas when we think first, comment next, and forget about it unless there are replies to our comments. No pressure, no useless debates.

#ml #statistics

I read about conformal prediction a while ago and realized that I need to understand more about the hypothesis testing theories. As someone from natural science, I mostly work within the Neyman-Pearson ideas.

So I explored it a bit and found two nice papers. See the list below. If you have other papers on similar topics, I would appreciate some comments.

1. Perezgonzalez JD. Fisher, Neyman-Pearson or NHST? A tutorial for teaching data testing. Front Psychol. 2015;6: 223. doi:10.3389/fpsyg.2015.00223 https://www.frontiersin.org/articles/10.3389/fpsyg.2015.00223/full

2. Lehmann EL. The Fisher, Neyman-Pearson Theories of Testing Hypotheses: One Theory or Two? J Am Stat Assoc. 1993;88: 1242–1249. doi:10.2307/2291263
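
To make the link to testing concrete, here is a minimal sketch of split conformal prediction for regression, using only the standard library. Everything in it is an assumption for illustration: `predict` is a hypothetical pre-fitted model, the calibration data is synthetic, and the nonconformity score is the absolute residual. The chosen quantile plays a role similar to a critical value at significance level `alpha`.

```python
import math
import random


def conformal_quantile(scores, alpha):
    """Finite-sample-corrected (1 - alpha) quantile of calibration scores."""
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # rank of the score to use
    if k > n:
        return math.inf  # too few calibration points for this alpha
    return sorted(scores)[k - 1]


def predict(x):
    """Hypothetical pre-fitted point predictor (stands in for any model)."""
    return 2.0 * x


random.seed(0)
# Toy calibration data: y = 2x + Gaussian noise (made up for illustration).
calibration = [(x, 2.0 * x + random.gauss(0.0, 1.0))
               for x in (random.uniform(0, 10) for _ in range(500))]

# Nonconformity score: absolute residual on held-out calibration data.
scores = [abs(y - predict(x)) for x, y in calibration]
q = conformal_quantile(scores, alpha=0.1)

# Symmetric interval around the point prediction for a new input.
x_new = 4.0
interval = (predict(x_new) - q, predict(x_new) + q)
print(f"90% prediction interval at x={x_new}: {interval}")
```

The interval width comes entirely from the held-out residuals, so the same recipe works with any point predictor in place of `predict`.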

https://refactoring.guru/design-patterns/catalog A nicely designed refactoring tutorial #java #refactoring #desginpattern

![UK COVID deaths in 2021 assorted by age group [OC]](/static/https://cdn4.telesco.pe/file/RM9UjXkZFwhtPKNYojq6EJjEO-9rNqXxa_GpHLfPSPGVDoTFl7RjC7FV1CM0-T5mLAGeM_UdNWix9aXToQgwnHycxQhP0K1gh6saSTHH5MylkRy86m8CtcEY4fyO5fYuyxgRpgA1Ts0zKrgr7MNvpqqkppA3_kaFlOil9h3BiUlVUCv_YgdaoUjRFzSf6TuLktfRsAAXL2lZ3kYasv2cV_HS1tPlSoTRSOIzbgNzTc3vfnpjBnZ5MkZGi7GRALr_o1TyK4ICcRDTYeov1zbw5W2X-DfT33Zxrob0gowjF1dGKHlHvCKQJYnyO13tKS4N6DpjXaEZwmcnV1vrYfk3cA.jpg)