Machine learning and other gibberish

See also: https://sharing.leima.is

Archives: https://datumorphism.leima.is/amneumarkt/

Well, "one engineer one month one million lines of code" -> "who the hell knows what's in there" -> "you need some AI coder architect".

https://www.linkedin.com/posts/galenh_principal-software-engineer-coreai-microsoft-activity-7407863239289729024-WTzf

#ai

It's just conflicting thoughts patched together.

AI Is Changing How We Learn at Work

https://hbr.org/2025/12/ai-is-changing-how-we-learn-at-work

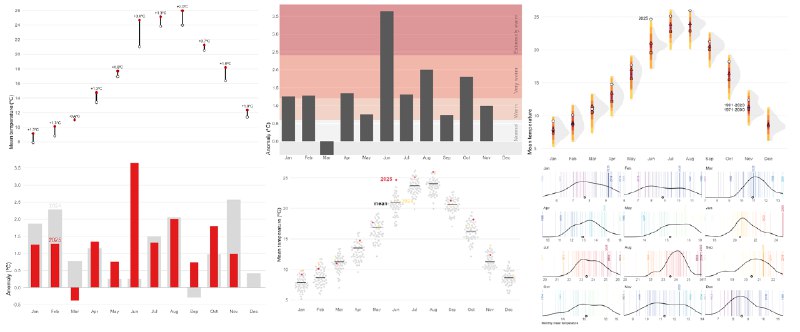

#visualization

Broken Chart: discover 9 visualization alternatives – Dr Dominic Royé

https://dominicroye.github.io/blog/2025-12-14-broken-charts/

There's always something more exciting to explore in our life. If not, we create new games with new rules. This is what we humans are really good at.

Kosmyna, Nataliya, Eugene Hauptmann, Ye Tong Yuan, Jessica Situ, Xian-Hao Liao, Ashly Vivian Beresnitzky, Iris Braunstein, and Pattie Maes. 2025. “Your Brain on ChatGPT: Accumulation of Cognitive Debt When Using an AI Assistant for Essay Writing Task.” arXiv [Cs.AI]. arXiv. http://arxiv.org/abs/2506.08872.

#engineering

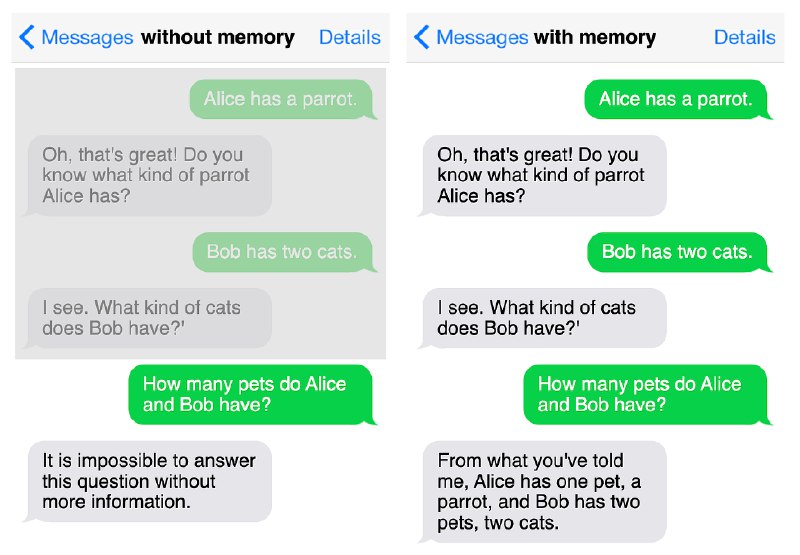

Clear and concise intro.

Making Sense of Memory in AI Agents – Leonie Monigatti

https://www.leoniemonigatti.com/blog/memory-in-ai-agents.html

#misc

Quote from the following article:

> Scientists want AI partnership but can't yet trust it for core research

Introducing Anthropic Interviewer \ Anthropic https://www.anthropic.com/news/anthropic-interviewer

#ai

What GitHub imagined about everyone creating their own app is almost here. GitHub Spark is not that good, and Vercel v0 is also buggy. Claude is doing a fairly good job here.

The other day I needed a special checklist and instead of writing the code myself, I asked Gemini to do it and it was surprisingly good.

---

Build interactive diagram tools | Claude

https://www.claude.com/resources/use-cases/build-interactive-diagram-tools

#ai

Interesting... Going from finite-dimensional Euclidean space to Hilbert space

https://openreview.net/forum?id=1b7whO4SfY

#data

Last year a colleague introduced Marimo to me. It was surprisingly good for the first few days, but then I got annoyed by the automatic reruns of expensive computations.

Then I bailed out.

This morning I read a blog post on this topic and realized there's a switch...

Time to jump in again.

https://docs.marimo.io/guides/configuration/runtime_configuration/#disable-autorun-on-cell-change-lazy-execution

#dl

Introducing more symmetries in attention

https://github.com/NVIDIA/torch-harmonics

https://neurips.cc/virtual/2025/loc/san-diego/poster/117783

Lol, citing The Onion in a research paper...

https://ajba.um.edu.my/index.php/JAT/article/download/11954/7915

#ai

This is more than language models. Somehow many forecasting models also almost fall into this realm. The root of this seems to be the latent space. Time series models, even classical ones, have an enlarged latent space, which more or less embeds patterns in higher dimensions.

However, this paper is a bit fishy. I just can't trust the proof of Theorem 2.2.

Language Models are Injective and Hence Invertible

https://arxiv.org/abs/2510.15511
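
One hedged way to read the "enlarged latent space" of classical time series models is a delay (lag) embedding, where each scalar observation is lifted to a vector of lagged values. The sketch below only illustrates that reading and is not taken from the paper; `delay_embed`, `dim`, and `lag` are hypothetical names chosen for this example.

```python
import numpy as np


def delay_embed(series, dim, lag=1):
    """Stack lagged copies of a 1-D series into rows of length `dim`.

    Hypothetical helper for illustration only.
    """
    x = np.asarray(series, dtype=float)
    n_rows = len(x) - (dim - 1) * lag
    if n_rows <= 0:
        raise ValueError("series is too short for this dim and lag")
    # Row t is (x[t], x[t + lag], ..., x[t + (dim - 1) * lag]).
    return np.stack([x[i * lag : i * lag + n_rows] for i in range(dim)], axis=1)


# A scalar sine wave becomes a set of points in 3 dimensions.
t = np.linspace(0.0, 4.0 * np.pi, 200)
signal = np.sin(t)
embedded = delay_embed(signal, dim=3, lag=5)
print(embedded.shape)
```

An autoregressive model with `dim` lags effectively works on rows like these, which is the sense in which even a classical model sees the series in a higher-dimensional space.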

#ai

I ran into this quite interesting paper when exploring embeddings of time series.

In the past, the manifold hypothesis has always worked quite well for me on physical-world data. You just take a model, compress the data, do something in the latent space, decode it, and damn, it works so well. The latent space is so magical.

To me, the hyperparameters for the latent space have always been some kind of battle between the curse of dimensionality and the Whitney embedding theorem.

Then came the language models. The ancient word2vec was already amazing. It raises the question of why embeddings work unbelievably well in language models, and it bugs me a lot. If you think about it, regardless of the model, embeddings have been working so well. This hints that language embeddings might be universal. There is the linear representation hypothesis, but it is weird as it misses the global structure. This paper provides a bit more clarity. The authors make a lot of assumptions, but the proposal is interesting in the sense that the cosine similarity we use is likely a tool that depends on the distance on the manifold of the continuous features behind the scenes.

https://arxiv.org/abs/2505.18235v1
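
A toy illustration of the last point, assuming the simplest possible case: a single continuous feature (an angle) represented as a point on the unit circle. There, cosine similarity is exactly the cosine of the arc-length (geodesic) distance along the manifold. This is a sketch under that assumption, not the construction used in the paper; all names are made up for the example.

```python
import numpy as np


def cosine_similarity(a, b):
    """Plain cosine similarity between two vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


# Continuous feature: an angle theta, embedded on the unit circle in 2-D.
# Angles are kept within [0, pi] so |theta - theta_0| is the arc length.
thetas = np.array([0.0, 0.3, 1.0, 2.0])
points = np.stack([np.cos(thetas), np.sin(thetas)], axis=1)

anchor = points[0]
for theta, point in zip(thetas, points):
    geodesic = abs(theta - thetas[0])  # distance along the circle
    similarity = cosine_similarity(anchor, point)
    # On the unit circle the two columns agree: similarity == cos(geodesic).
    print(f"geodesic={geodesic:.2f}  cosine={similarity:.4f}  cos(geodesic)={np.cos(geodesic):.4f}")
```

In this toy setting the similarity drops monotonically as the points move apart along the curve, so ranking by cosine similarity is the same as ranking by distance on the manifold.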

#misc

Attention Authors: Updated Practice for Review Articles and Position Papers in arXiv CS Category

https://blog.arxiv.org/2025/10/31/attention-authors-updated-practice-for-review-articles-and-position-papers-in-arxiv-cs-category/

AI to juniors is more or less a "fuck you in particular" thingy.

https://digitaleconomy.stanford.edu/publications/canaries-in-the-coal-mine/