Machine learning and other gibberish

See also: https://sharing.leima.is

Archives: https://datumorphism.leima.is/amneumarkt/

#ts

I love the last paragraph, especially this sentence:

> Unfortunately, I can’t continue my debate with Clive Granger. I rather hoped he would come to accept my point of view.

Rob J Hyndman - The difference between prediction intervals and confidence intervals

https://robjhyndman.com/hyndsight/intervals/
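A rough sketch of the distinction, for the simplest case of estimating a mean from i.i.d. data: the confidence interval covers the unknown mean, while the prediction interval covers one not-yet-observed value. The sample values are made up, and I use a normal quantile instead of a t-quantile to stay within the standard library, so the intervals are slightly too narrow for n = 10.

```python
import math
from statistics import NormalDist, mean, stdev

# Hypothetical sample: ten made-up observations.
sample = [102, 98, 110, 95, 105, 99, 101, 107, 93, 100]
n = len(sample)
m = mean(sample)
s = stdev(sample)  # sample standard deviation (n - 1 in the denominator)

# Normal quantile for 95% coverage (assumption: a t-quantile would be
# the textbook choice for such a small sample).
z = NormalDist().inv_cdf(0.975)

# Confidence interval: uncertainty about the mean itself.
ci_half = z * s / math.sqrt(n)

# Prediction interval: uncertainty about a single new observation,
# which adds the observation noise on top of the estimation error.
pi_half = z * s * math.sqrt(1 + 1 / n)

print(f"95% CI for the mean:    {m - ci_half:.1f} to {m + ci_half:.1f}")
print(f"95% PI for a new value: {m - pi_half:.1f} to {m + pi_half:.1f}")
```

The confidence interval shrinks toward zero width as n grows, but the prediction interval never gets narrower than roughly ±z·s.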

#data

Quite useful.

I use pyarrow a lot, and a bit of polars, mostly because pandas is slow. With the pandas 2.0 release, all three libraries connect to each other seamlessly.

https://datapythonista.me/blog/pandas-20-and-the-arrow-revolution-part-i

#ai

The performance is not too bad. But given that this is about academic topics, this level of hallucination sounds terrible.

https://bair.berkeley.edu/blog/2023/04/03/koala/

#ai

A lot of big names signed it. (Not sure how they verify the signatories, though.)

Personally, I'm not buying it.

https://futureoflife.org/open-letter/pause-giant-ai-experiments/

#dl

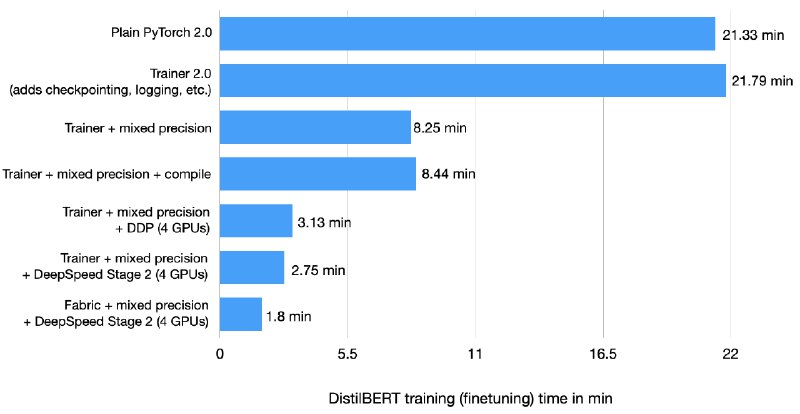

I am experimenting with PyTorch 2.0 and looking for potential training time improvements in Lightning. The following article is a very good introduction.

https://lightning.ai/pages/community/tutorial/how-to-speed-up-pytorch-model-training/

#ml

Pérez J, Barceló P, Marinkovic J. Attention is Turing-Complete. J Mach Learn Res. 2021;22: 1–35. Available: https://jmlr.org/papers/v22/20-302.html

This is how generative AI is changing our lives. Now that I think about it, the competitive advantage we used to get from our hard-earned technical skills is fading away.

What should we invest in for a better career? Just integrate whatever comes along into our workflow? Or fundamentally change the way we think?

#dl

https://github.com/Lightning-AI/lightning/releases/tag/2.0.0

You can now compile a LightningModule with `torch.compile` (PyTorch 2.0).

```python
import torch
import lightning as L

# LitModel stands for your own LightningModule subclass (not defined here)
model = LitModel()

# This will compile forward and {training,validation,test,predict}_step
compiled_model = torch.compile(model)

trainer = L.Trainer()
trainer.fit(compiled_model)
```

#ml

https://mlcontests.com/state-of-competitive-machine-learning-2022/

Quote from the report:

> - Successful competitors have mostly converged on a common set of tools — Python, PyData, PyTorch, and gradient-boosted decision trees.
> - Deep learning still has not replaced gradient-boosted decision trees when it comes to tabular data, though it does often seem to add value when ensembled with boosting methods.
> - Transformers continue to dominate in NLP, and start to compete with convolutional neural nets in computer vision.
> - Competitions cover a broad range of research areas including computer vision, NLP, tabular data, robotics, time-series analysis, and many others.
> - Large ensembles remain common among winners, though single-model solutions do win too.
> - There are several active machine learning competition platforms, as well as dozens of purpose-built websites for individual competitions.
> - Competitive machine learning continues to grow in popularity, including in academia.
> - Around 50% of winners are solo winners; 50% of winners are first-time winners; 30% have won more than once before.
> - Some competitors are able to invest significantly into hardware used to train their solutions, though others who use free hardware like Google Colab are also still able to win competitions.

Great thread on how a communication failure contributed to SVB’s collapse.

https://twitter.com/lulumeservey/status/1634232322693144576
