Machine learning and other gibberish

See also: https://sharing.leima.is

Archives: https://datumorphism.leima.is/amneumarkt/

Diffusion Models for Handwriting Generation

https://github.com/tcl9876/Diffusion-Handwriting-Generation

What is going on with Trolli? What happened?

https://www.visualcapitalist.com/gen-z-favorite-brands-compared-with-older-generations/

Liu, Yong, Haixu Wu, Jianmin Wang, and Mingsheng Long. 2022. “Non-Stationary Transformers: Exploring the Stationarity in Time Series Forecasting.” ArXiv [Cs.LG], May. https://doi.org/10.48550/ARXIV.2205.14415.

https://github.com/thuml/Nonstationary_Transformers

#ml

https://arxiv.org/abs/2210.10101v1

Bernstein, Jeremy. 2022. “Optimisation & Generalisation in Networks of Neurons.” ArXiv [Cs.NE], October. https://doi.org/10.48550/ARXIV.2210.10101.

#ml

😱😱😱 Fancy

Video: https://fb.watch/giO0tV4N4T

Press: https://research.facebook.com/publications/dressing-avatars-deep-photorealistic-appearance-for-physically-simulated-clothing/

Xiang, Donglai, Timur Bagautdinov, Tuur Stuyck, Fabian Prada, Javier Romero, Weipeng Xu, Shunsuke Saito, et al. 2022. “Dressing Avatars: Deep Photorealistic Appearance for Physically Simulated Clothing.” ArXiv [Cs.GR], June. https://arxiv.org/abs/2206.15470

#ml

https://developer.nvidia.com/blog/how-optimize-data-transfers-cuda-cc/

I find this post very useful. I have always wondered what happens after my dataloader has prepared everything for the GPU. I didn’t know that CUDA has to copy pageable host data again into page-locked memory before transferring it to the device.

I used to set pin_memory=True in a PyTorch DataLoader and benchmark it. To be honest, I have only observed very small improvements in most of my experiments. So I stopped caring about pin_memory.

After some digging, I also realized that getting a performance gain from pin_memory=True in DataLoader is tricky. If we neither use multiprocessing nor reuse the page-locked memory, it is hard to expect any performance gain.

(some other notes: https://datumorphism.leima.is/cards/machine-learning/practice/cuda-memory/)
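
For anyone who wants to repeat this kind of benchmark, here is a minimal sketch of how I would time it. The synthetic dataset, the sizes, and the helper names make_loader and time_epoch are made up for illustration; they are not from the NVIDIA post. It only measures the host-to-device copies, and it falls back to pageable memory on a machine without CUDA.

```python
import time

import torch
from torch.utils.data import DataLoader, TensorDataset


def make_loader(pin_memory: bool, num_workers: int = 0) -> DataLoader:
    # Synthetic data standing in for a real dataset (an assumption for this sketch).
    features = torch.randn(2048, 256)
    targets = torch.randint(0, 10, (2048,))
    return DataLoader(
        TensorDataset(features, targets),
        batch_size=128,
        num_workers=num_workers,
        pin_memory=pin_memory,
    )


def time_epoch(loader: DataLoader, device: torch.device) -> float:
    """Return the seconds spent moving one epoch of batches to `device`."""
    start = time.perf_counter()
    for x, y in loader:
        # non_blocking=True can only overlap the copy with other work
        # when the source tensor lives in page-locked memory.
        x = x.to(device, non_blocking=True)
        y = y.to(device, non_blocking=True)
    if device.type == "cuda":
        # Wait for pending asynchronous copies before stopping the clock.
        torch.cuda.synchronize()
    return time.perf_counter() - start


if __name__ == "__main__":
    use_cuda = torch.cuda.is_available()
    device = torch.device("cuda" if use_cuda else "cpu")
    for pin in (False, True):
        # Pinning needs a CUDA runtime, so use pageable memory without one.
        loader = make_loader(pin_memory=pin and use_cuda)
        print(f"pin_memory={pin}: {time_epoch(loader, device):.4f} s")
```

With num_workers=0 and nothing else running on the GPU, I would not be surprised if the two timings come out almost the same. Raising num_workers and doing real compute between batches is probably where a difference starts to show.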

#ml

Amazon has been updating their Machine Learning University website. It is getting more and more interesting.

They have added an article about linear regression recently. There is a section in this article about interpreting linear models and it is just fun.

https://mlu-explain.github.io/

( Time machine: https://t.me/amneumarkt/293 )

#showerthoughts

I've never thought about dark mode in LaTeX. It sounds weird at first, but now that I think about it, it's actually a great style.

This is a dark style from Dracula.

https://draculatheme.com/latex

#ML

This is interesting.

Toy Models of Superposition. [cited 15 Sep 2022]. Available: https://transformer-circuits.pub/2022/toy_model/index.html#learning

Germany is so small.

My GitHub profile ranks 102nd in Germany by public contributions.

https://github.com/gayanvoice/top-github-users

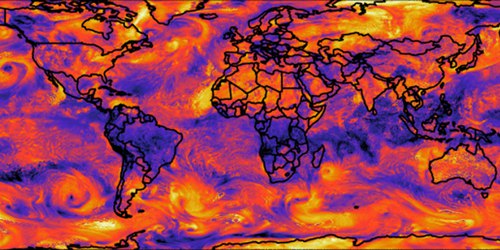

> Tracking of an Eagle over a 20 year period.

Source:

https://twitter.com/Loca1ion/status/1566346534651924480?s=20&t=AKXn9U-L3fyhrJzeAXySlA

#fun

Some results from the Stable Diffusion model. See comments for some examples.

https://huggingface.co/CompVis/stable-diffusion

Hmm not so many contributions from wild animals.

Source: https://www.weforum.org/agenda/2021/08/total-biomass-weight-species-earth

Data from this paper: https://www.pnas.org/doi/10.1073/pnas.1711842115#T1

#ml

https://ai.googleblog.com/2022/08/optformer-towards-universal.html?m=1

I find this work counterintuitive. They took some descriptions of the optimization in machine learning and trained a transformer to "guesstimate" the hyperparameters of a model.

I understand that humans develop some "feeling" for the hyperparameters after working with the data and model for a while. But it is usually hard to extrapolate such knowledge when we have completely new data and models.

I guess our brains do some statistics on our past experiments, and we call this intuition. My "intuition" is that there is little generalizable knowledge in this problem. 🙈 It would have been great if they had investigated the saliency maps.

#fun

I became a beta tester of DALL·E. I played with it for a while and it is quite fun. See the comments for some examples.

Comment if you would like to test some prompts.

#fun

> participants who spent more than six hours working on a tedious and mentally taxing assignment had higher levels of glutamate — an important signalling molecule in the brain. Too much glutamate can disrupt brain function, and a rest period could allow the brain to restore proper regulation of the molecule

https://www.nature.com/articles/d41586-022-02161-5
